Frameworks

The WebdriverIO runner has built-in support for Mocha, Jasmine, and Cucumber.js. You can also integrate it with third-party open-source frameworks, such as Serenity/JS.

To integrate WebdriverIO with a test framework, you need an adapter package available on NPM. Note that the adapter package must be installed in the same location where WebdriverIO is installed. So, if you installed WebdriverIO globally, be sure to install the adapter package globally, too.

Integrating WebdriverIO with a test framework lets you access the WebDriver instance using the global browser variable in your spec files or step definitions.

Note that WebdriverIO also takes care of instantiating and ending the Selenium session, so you don't have to do it yourself.

Using Mocha

First, install the adapter package from NPM:

npm:

npm install @wdio/mocha-framework --save-dev

Yarn:

yarn add @wdio/mocha-framework --dev

pnpm:

pnpm add @wdio/mocha-framework --save-dev

By default, WebdriverIO provides a built-in assertion library that you can use right away:

describe('my awesome website', () => {
    it('should do some assertions', async () => {
        await browser.url('https://webdriver.io')
        await expect(browser).toHaveTitle('WebdriverIO · Next-gen browser and mobile automation test framework for Node.js | WebdriverIO')
    })
})

WebdriverIO supports Mocha's BDD (default), TDD, and QUnit interfaces.

If you'd like to write your specs in TDD style, set the ui property in your mochaOpts config to tdd. Your test files should then be written like this:

suite('my awesome website', () => {
    test('should do some assertions', async () => {
        await browser.url('https://webdriver.io')
        await expect(browser).toHaveTitle('WebdriverIO · Next-gen browser and mobile automation test framework for Node.js | WebdriverIO')
    })
})

If you want to define other Mocha-specific settings, you can do it with the mochaOpts key in your configuration file. A list of all options can be found on the Mocha project website.
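For example, here is a minimal configuration excerpt that switches Mocha to the TDD interface. It is a sketch: the timeout value is only illustrative, and the rest of your configuration is assumed to be unchanged.

```typescript
// wdio.conf.ts (excerpt): run specs with Mocha's TDD interface (suite/test)
export const config = {
    framework: 'mocha',
    mochaOpts: {
        ui: 'tdd',      // 'bdd' (default), 'tdd' or 'qunit'
        timeout: 60000, // illustrative per-test timeout in ms
    },
}
```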

Note: WebdriverIO does not support the deprecated usage of done callbacks in Mocha:

it('should test something', (done) => {
    done() // throws "done is not a function"
})
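If a spec still relies on a callback-style helper, one option is to wrap that helper in a Promise and await it from an async test instead of calling done. The sketch below uses a hypothetical helper named legacyFetchStatus as a stand-in for such code. Only the wrapping technique matters here.

```typescript
// Hypothetical callback-style helper, standing in for code that used to signal done()
function legacyFetchStatus(cb: (err: Error | null, status?: string) => void): void {
    setTimeout(() => cb(null, 'ok'), 10)
}

// Wrap the callback API in a Promise so an async test can simply await it
function fetchStatus(): Promise<string> {
    return new Promise((resolve, reject) => {
        legacyFetchStatus((err, status) => (err ? reject(err) : resolve(status ?? '')))
    })
}

// Inside a spec this becomes:
// it('should test something', async () => {
//     const status = await fetchStatus()
//     expect(status).toBe('ok')
// })
```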

Mocha Options

The following options can be applied in your wdio.conf.js to configure your Mocha environment. Note that not all options are supported. For example, applying the parallel option causes an error because the WDIO testrunner has its own way of running tests in parallel. The following options are supported:

require

The require option is useful when you want to add or extend some basic functionality (WebdriverIO framework option).

Type: string|string[]

Default: []

compilers

Use the given module(s) to compile files. Compilers will be included before requires (WebdriverIO framework option).

Type: string[]

Default: []

allowUncaught

Propagate uncaught errors.

Type: boolean

Default: false

bail

Bail after first test failure.

Type: boolean

Default: false

checkLeaks

Check for global variable leaks.

Type: boolean

Default: false

delay

Delay root suite execution.

Type: boolean

Default: false

fgrep

Only run tests whose full title contains the given string.

Type: string

Default: null

forbidOnly

Tests marked with only fail the suite.

Type: boolean

Default: false

forbidPending

Pending tests fail the suite.

Type: boolean

Default: false

fullTrace

Full stacktrace upon failure.

Type: boolean

Default: false

global

Variables expected in global scope.

Type: string[]

Default: []

grep

Only run tests whose full title matches the given regular expression.

Type: RegExp|string

Default: null

invert

Invert test filter matches.

Type: boolean

Default: false

retries

Number of times to retry failed tests.

Type: number

Default: 0

timeout

Timeout threshold value (in ms).

Type: number

Default: 30000
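To illustrate how grep, fgrep, and invert interact, here is a small model of the filtering rule. Mocha matches the pattern against a test's full title (the suite titles plus the test title). fgrep is treated as a literal string, and invert negates the result. The function names are hypothetical, and this is a sketch of the behavior, not Mocha's source code.

```typescript
// Hypothetical model of Mocha's title filtering; not Mocha's actual implementation.
interface FilterOptions {
    grep?: RegExp | string
    fgrep?: string
    invert?: boolean // only meaningful together with grep or fgrep
}

// Escape regex metacharacters so fgrep matches its input literally
function escapeRegExp(text: string): string {
    return text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&')
}

// Mocha treats grep and fgrep as mutually exclusive; this model simply prefers fgrep
function toPattern({ grep, fgrep }: FilterOptions): RegExp | undefined {
    if (fgrep !== undefined) return new RegExp(escapeRegExp(fgrep)) // literal match
    if (grep instanceof RegExp) return grep
    if (grep !== undefined) return new RegExp(grep) // a string is used as a regex source
    return undefined
}

function shouldRun(fullTitle: string, options: FilterOptions): boolean {
    const pattern = toPattern(options)
    if (pattern === undefined) return true // no filter configured: every test runs
    const matches = pattern.test(fullTitle)
    return options.invert ? !matches : matches
}
```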

Using Jasmine

First, install the adapter package from NPM:

npm:

npm install @wdio/jasmine-framework --save-dev

Yarn:

yarn add @wdio/jasmine-framework --dev

pnpm:

pnpm add @wdio/jasmine-framework --save-dev

You can then configure your Jasmine environment by setting a jasmineOpts property in your config. A list of all options can be found on the Jasmine project website.

Intercept Assertion

The Jasmine framework allows you to intercept each assertion in order to log the state of the application or website, depending on the result.

For example, it is pretty handy to take a screenshot every time an assertion fails. In your jasmineOpts you can add a property called expectationResultHandler that takes a function to execute. The function’s parameters provide information about the result of the assertion.

The following example demonstrates how to take a screenshot if an assertion fails:

jasmineOpts: {
    defaultTimeoutInterval: 10000,
    expectationResultHandler: function(passed, assertion) {
        /**
         * only take screenshot if assertion failed
         */
        if (passed) {
            return
        }
        browser.saveScreenshot(`assertionError_${assertion.error.message}.png`)
    }
},

Note: You cannot pause test execution to do something asynchronous. The screenshot command may take long enough that the website state has already changed by the time it runs. (Usually, though, the screenshot is taken within the next two commands, which still gives some valuable information about the error.)
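Assertion messages often contain quotes, slashes, or other characters that are not valid in file names. For that reason, you may want to sanitize the message before using it in the screenshot path. The helper below, screenshotFileName, is hypothetical, and the 100-character limit is an arbitrary assumption.

```typescript
// Hypothetical helper: build a file-system-safe screenshot name from an assertion message
function screenshotFileName(message: string, maxLength = 100): string {
    const safe = message
        .replace(/[^a-zA-Z0-9_-]+/g, '_') // collapse runs of unsafe characters into "_"
        .replace(/^_+|_+$/g, '')          // drop leading and trailing underscores
        .slice(0, maxLength)              // keep names reasonably short
    return `assertionError_${safe || 'unknown'}.png`
}

// The handler from the example above could then call:
// browser.saveScreenshot(screenshotFileName(assertion.error.message))
```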

Jasmine Options

The following options can be applied in your wdio.conf.js to configure your Jasmine environment using the jasmineOpts property. For more information on these configuration options, check out the Jasmine docs.

defaultTimeoutInterval

Default Timeout Interval for Jasmine operations.

Type: number

Default: 60000

helpers

Array of filepaths (and globs) relative to spec_dir to include before jasmine specs.

Type: string[]

Default: []

requires

The requires option is useful when you want to add or extend some basic functionality.

Type: string[]

Default: []

random

Whether to randomize spec execution order.

Type: boolean

Default: true

seed

Seed to use as the basis of randomization. Null causes the seed to be determined randomly at the start of execution.

Type: number|string

Default: null

failSpecWithNoExpectations

Whether to fail the spec if it ran no expectations. By default, a spec that ran no expectations is reported as passed. Setting this to true will report such a spec as a failure.

Type: boolean

Default: false

oneFailurePerSpec

Whether to cause specs to only have one expectation failure.

Type: boolean

Default: false

specFilter

Function to use to filter specs.

Type: Function

Default: (spec) => true

grep

Only run tests matching this string or regexp. (Only applicable if no custom specFilter function is set)

Type: string|RegExp

Default: null

invertGrep

If true, it inverts the match and only runs tests that don't match the expression used in grep. (Only applicable if no custom specFilter function is set)

Type: boolean

Default: false
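As an illustration, the excerpt below sets a few of these options and uses a custom specFilter that skips specs whose full name contains a hypothetical @slow marker. Because a custom specFilter is set, grep and invertGrep would be ignored. The MinimalSpec type is an assumption that models only the getFullName() method of a Jasmine spec.

```typescript
// Assumption: only the part of a Jasmine spec this filter needs
interface MinimalSpec {
    getFullName(): string
}

// wdio.conf.ts (excerpt)
export const config = {
    framework: 'jasmine',
    jasmineOpts: {
        defaultTimeoutInterval: 60000,
        random: false,                    // run specs in declaration order
        failSpecWithNoExpectations: true, // a spec without expect() calls fails
        // Skip specs whose full name contains the (hypothetical) "@slow" marker
        specFilter: (spec: MinimalSpec): boolean => !spec.getFullName().includes('@slow'),
    },
}
```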

Using Cucumber

First, install the adapter package from NPM:

npm:

npm install @wdio/cucumber-framework --save-dev

Yarn:

yarn add @wdio/cucumber-framework --dev

pnpm:

pnpm add @wdio/cucumber-framework --save-dev

To use Cucumber, set the framework property to cucumber by adding framework: 'cucumber' to the config file.

Options for Cucumber can be given in the config file with cucumberOpts. The supported options are listed in the Cucumber Options section below.
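A minimal configuration excerpt might look like this. The feature and step-definition paths and the tag expression are placeholders to adapt to your project.

```typescript
// wdio.conf.ts (excerpt): run feature files with the Cucumber adapter
export const config = {
    framework: 'cucumber',
    specs: ['./features/**/*.feature'],                   // placeholder path
    cucumberOpts: {
        require: ['./features/step-definitions/**/*.js'], // placeholder glob
        tags: '@smoke and not @wip',                      // placeholder tag expression
        timeout: 60000,                                   // step timeout in ms
    },
}
```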

To get up and running quickly with Cucumber, have a look at our cucumber-boilerplate project. It comes with all the step definitions you need to get started, so you'll be writing feature files right away.

Cucumber Options

The following options can be applied in your wdio.conf.js to configure your Cucumber environment using the cucumberOpts property:

backtrace

Show full backtrace for errors.

Type: Boolean

Default: true

requireModule

Require modules prior to requiring any support files.

Type: string[]

Default: []

Example:

cucumberOpts: {
    requireModule: ['@babel/register']
    // or, to pass options to the module:
    // requireModule: [
    //     [
    //         '@babel/register',
    //         {
    //             rootMode: 'upward',
    //             ignore: ['node_modules']
    //         }
    //     ]
    // ]
}

failFast

Abort the run on first failure.

Type: boolean

Default: false

names

Only execute the scenarios with name matching the expression (repeatable).

Type: RegExp[]

Default: []

require

Require files containing your step definitions before executing features. You can also specify a glob to your step definitions.

Type: string[]

Default: []

Example:

cucumberOpts: {
    // assumes `const path = require('node:path')` at the top of a CommonJS config
    require: [path.join(__dirname, 'step-definitions', 'my-steps.js')]
}

import

Paths to where your support code is, for ESM.

Type: String[]

Default: []

Example:

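// ES module config: __dirname is undefined, so derive it from import.meta.url
// (with path from 'node:path' and fileURLToPath from 'node:url'):
// const __dirname = path.dirname(fileURLToPath(import.meta.url))
cucumberOpts: {
    import: [path.join(__dirname, 'step-definitions', 'my-steps.js')]
}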

strict

Fail if there are any undefined or pending steps.

Type: boolean

Default: false

tags

Only execute the features or scenarios with tags matching the expression. Please see the Cucumber documentation for more details.

Type: String

Default: '' (empty string)

timeout

Timeout in milliseconds for step definitions.

Type: Number

Default: 30000

retry

Specify the number of times to retry failing test cases.

Type: Number

Default: 0

retryTagFilter

Only retry the features or scenarios with tags matching the expression (repeatable). This option requires retry to be specified.

Type: RegExp

language

Default language for your feature files.

Type: String

Default: en

order

Run tests in defined or random order.

Type: String

Default: defined

format

Name and output file path of the formatter to use. WebdriverIO primarily supports only formatters that write output to a file.

Type: string[]

formatOptions

Options to be provided to formatters.

Type: object

tagsInTitle

Add Cucumber tags to the feature or scenario name.

Type: Boolean

Default: false

Note that this is a @wdio/cucumber-framework-specific option and is not recognized by cucumber-js itself.

ignoreUndefinedDefinitions

Treat undefined definitions as warnings.

Type: Boolean

Default: false

Note that this is a @wdio/cucumber-framework-specific option and is not recognized by cucumber-js itself.

failAmbiguousDefinitions

Treat ambiguous definitions as errors.

Type: Boolean

Default: false

Note that this is a @wdio/cucumber-framework-specific option and is not recognized by cucumber-js itself.

tagExpression

Only execute the features or scenarios with tags matching the expression. Please see the Cucumber documentation for more details.

Type: String

Default: '' (empty string)

Note that this option will be deprecated in the future. Use the tags option instead.

profile

Specify the profile to use.

Type: string[]

Default: []

Note that only specific values (worldParameters, name, retryTagFilter) are supported within profiles, because cucumberOpts takes precedence. When using a profile, make sure these values are not also declared in cucumberOpts.

Skipping tests in Cucumber

Note that if you skip a test using the regular Cucumber test filtering capabilities available in cucumberOpts, it is skipped for all the browsers and devices configured in the capabilities. To skip scenarios only for specific capability combinations, without starting a session when it isn't necessary, WebdriverIO provides the following specific tag syntax for Cucumber:

@skip([condition])

where condition is an optional combination of capability properties and their values. When all of them match, the tagged scenario or feature is skipped. You can add several tags to scenarios and features to skip a test under several different conditions.

You can also use the @skip annotation to skip tests without changing tagExpression. In this case, the skipped tests are displayed in the test report.

Here are some examples of this syntax:

- @skip or @skip(): will always skip the tagged item.
- @skip(browserName="chrome"): the test will not be executed against Chrome browsers.
- @skip(browserName="firefox";platformName="linux"): will skip the test in Firefox on Linux executions.
- @skip(browserName=["chrome","firefox"]): tagged items will be skipped for both Chrome and Firefox browsers.
- @skip(browserName=/i.*explorer/): capabilities with browsers matching the regexp will be skipped (like iexplorer, internet explorer, internet-explorer, ...).
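The matching rules described above can be summarized in a small model. A plain string must equal the capability value, an array matches any of its entries, and a RegExp is tested against the value. Every property in one condition must match, and several @skip tags act as alternatives. The function names are hypothetical, and this is an illustration of the semantics, not WebdriverIO's source code.

```typescript
// Hypothetical model of the @skip(...) semantics; not WebdriverIO's implementation.
type Condition = string | string[] | RegExp

// A single condition value matches a capability value by equality (string),
// inclusion (array), or regex test (RegExp)
function valueMatches(expected: Condition, actual: unknown): boolean {
    if (typeof actual !== 'string') return false
    if (expected instanceof RegExp) return expected.test(actual)
    if (Array.isArray(expected)) return expected.includes(actual)
    return expected === actual
}

// One @skip tag: skip when every property matches; an empty condition (@skip or @skip()) always skips
function shouldSkip(condition: Record<string, Condition>, caps: Record<string, unknown>): boolean {
    return Object.entries(condition).every(([key, expected]) => valueMatches(expected, caps[key]))
}

// Several @skip tags on the same item: skip if any of them applies
function shouldSkipAny(conditions: Record<string, Condition>[], caps: Record<string, unknown>): boolean {
    return conditions.some((condition) => shouldSkip(condition, caps))
}
```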

Import Step Definition Helper

To use step definition helpers like Given, When, or Then, or hooks, import them from @cucumber/cucumber, e.g. like this:

import { Given, When, Then } from '@cucumber/cucumber'

However, if you already use a specific version of Cucumber for other types of tests unrelated to WebdriverIO, you need to import these helpers in your e2e tests from the WebdriverIO Cucumber package instead, e.g.:

import { Given, When, Then } from '@wdio/cucumber-framework'

This ensures that you use the right helpers within the WebdriverIO framework and allows you to use an independent Cucumber version for other types of testing.

Publishing Report

Cucumber provides a feature to publish your test run reports to https://reports.cucumber.io/, which can be controlled either by setting the publish flag in cucumberOpts or by configuring the CUCUMBER_PUBLISH_TOKEN environment variable. However, this approach has a limitation when you use WebdriverIO for test execution: it publishes a separate report for each feature file, which makes it difficult to view a consolidated report.

To overcome this limitation, we've introduced a promise-based method called publishCucumberReport in @wdio/cucumber-framework. The best place to call it is the onComplete hook. publishCucumberReport takes the path of the directory where the Cucumber message reports are stored.

You can generate cucumber message reports by configuring the format option in your cucumberOpts. It's highly recommended to provide a dynamic file name within the cucumber message format option to prevent overwriting reports and ensure that each test run is accurately recorded.

Before using this function, make sure to set the following environment variables:

- CUCUMBER_PUBLISH_REPORT_URL: The URL where you want to publish the Cucumber report. If not provided, the default URL 'https://messages.cucumber.io/api/reports' will be used.

- CUCUMBER_PUBLISH_REPORT_TOKEN: The authorization token required to publish the report. If this token is not set, the function will exit without publishing the report.
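The environment-variable rules above can be modeled with a small pure function. resolvePublishSettings and PublishSettings are hypothetical names. This sketch only illustrates the documented defaults and is not how publishCucumberReport is implemented.

```typescript
// Hypothetical model of the documented environment-variable rules
interface PublishSettings {
    url: string
    token: string
}

const DEFAULT_REPORT_URL = 'https://messages.cucumber.io/api/reports'

// Returns null when no token is set, mirroring "exit without publishing the report"
function resolvePublishSettings(env: Record<string, string | undefined>): PublishSettings | null {
    const token = env.CUCUMBER_PUBLISH_REPORT_TOKEN
    if (!token) return null
    return { url: env.CUCUMBER_PUBLISH_REPORT_URL || DEFAULT_REPORT_URL, token }
}
```

In a configuration file, you would typically pass process.env as the argument.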

Here's an example of the necessary configurations and code samples for implementation:

import { v4 as uuidv4 } from 'uuid'
import { publishCucumberReport } from '@wdio/cucumber-framework';

export const config = {
    // ... Other Configuration Options
    cucumberOpts: {
        // ... Cucumber Options Configuration
        format: [
            ['message', `./reports/${uuidv4()}.ndjson`],
            ['json', './reports/test-report.json']
        ]
    },
    async onComplete() {
        await publishCucumberReport('./reports');
    }
}

Please note that ./reports/ is the directory where cucumber message reports will be stored.

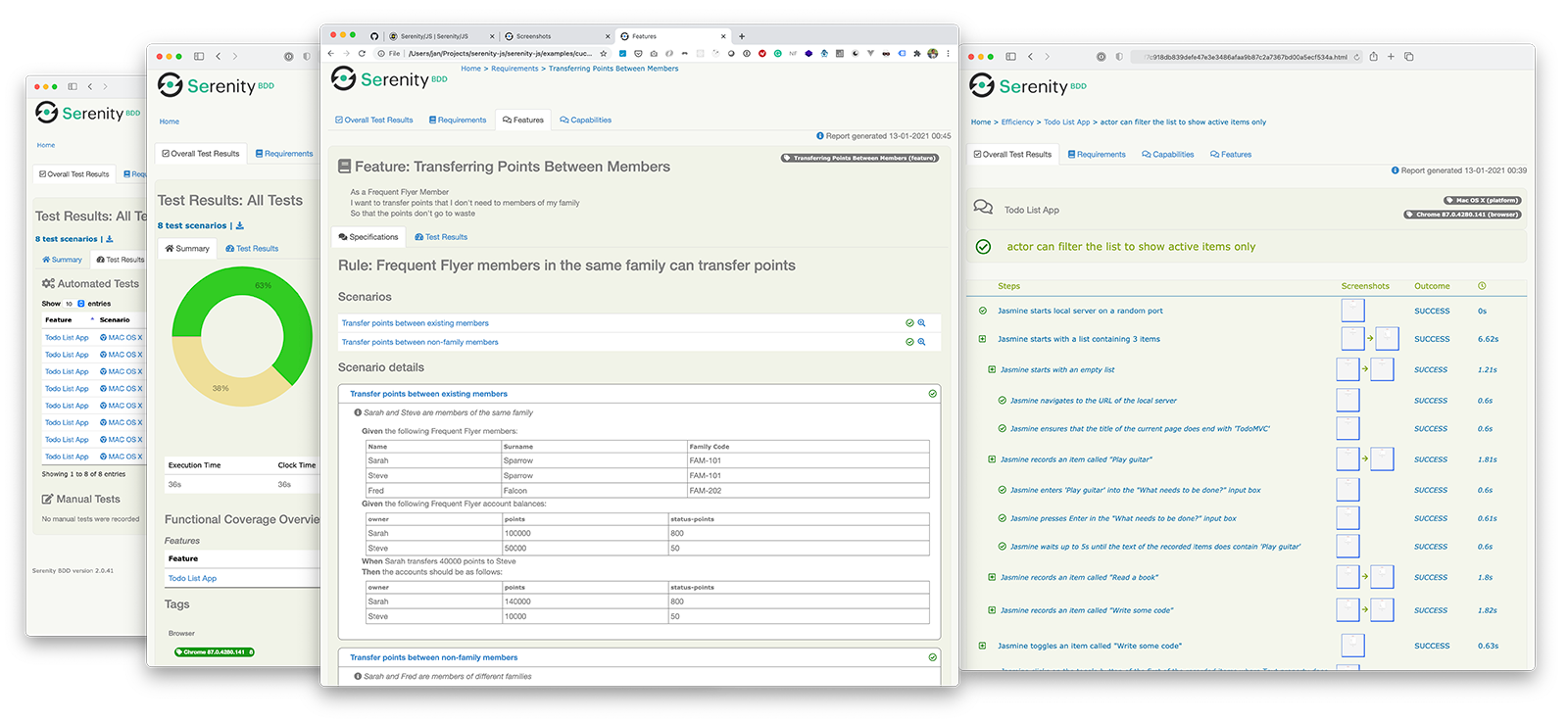

Using Serenity/JS

Serenity/JS is an open-source framework designed to make acceptance and regression testing of complex software systems faster, more collaborative, and easier to scale.

For WebdriverIO test suites, Serenity/JS offers:

- Enhanced Reporting - You can use Serenity/JS as a drop-in replacement for any built-in WebdriverIO framework to produce in-depth test execution reports and living documentation of your project.

- Screenplay Pattern APIs - To make your test code portable and reusable across projects and teams, Serenity/JS gives you an optional abstraction layer on top of native WebdriverIO APIs.

- Integration Libraries - For test suites that follow the Screenplay Pattern, Serenity/JS also provides optional integration libraries to help you write API tests, manage local servers, perform assertions, and more!

Installing Serenity/JS

To add Serenity/JS to an existing WebdriverIO project, install the following Serenity/JS modules from NPM:

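npm:

npm install @serenity-js/{core,web,webdriverio,assertions,console-reporter,serenity-bdd} --save-dev

Yarn:

yarn add @serenity-js/{core,web,webdriverio,assertions,console-reporter,serenity-bdd} --dev

pnpm:

pnpm add @serenity-js/{core,web,webdriverio,assertions,console-reporter,serenity-bdd} --save-dev

The {core,web,...} syntax relies on shell brace expansion, which is available in shells such as Bash and Zsh. In shells without it, such as Windows PowerShell or cmd, list each package explicitly.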

Learn more about Serenity/JS modules:

- @serenity-js/core
- @serenity-js/web
- @serenity-js/webdriverio
- @serenity-js/assertions
- @serenity-js/console-reporter
- @serenity-js/serenity-bdd

Configuring Serenity/JS

To enable integration with Serenity/JS, configure WebdriverIO as follows:

TypeScript (wdio.conf.ts):

import { WebdriverIOConfig } from '@serenity-js/webdriverio';

export const config: WebdriverIOConfig = {

    // Tell WebdriverIO to use Serenity/JS framework
    framework: '@serenity-js/webdriverio',

    // Serenity/JS configuration
    serenity: {
        // Configure Serenity/JS to use the appropriate adapter for your test runner
        runner: 'cucumber',
        // runner: 'mocha',
        // runner: 'jasmine',

        // Register Serenity/JS reporting services, a.k.a. the "stage crew"
        crew: [
            // Optional, print test execution results to standard output
            '@serenity-js/console-reporter',

            // Optional, produce Serenity BDD reports and living documentation (HTML)
            '@serenity-js/serenity-bdd',
            [ '@serenity-js/core:ArtifactArchiver', { outputDirectory: 'target/site/serenity' } ],

            // Optional, automatically capture screenshots upon interaction failure
            [ '@serenity-js/web:Photographer', { strategy: 'TakePhotosOfFailures' } ],
        ]
    },

    // Configure your Cucumber runner
    cucumberOpts: {
        // see Cucumber configuration options below
    },

    // ... or Jasmine runner
    jasmineOpts: {
        // see Jasmine configuration options below
    },

    // ... or Mocha runner
    mochaOpts: {
        // see Mocha configuration options below
    },

    runner: 'local',

    // Any other WebdriverIO configuration
};

JavaScript (wdio.conf.js):

export const config = {

    // Tell WebdriverIO to use Serenity/JS framework
    framework: '@serenity-js/webdriverio',

    // Serenity/JS configuration
    serenity: {
        // Configure Serenity/JS to use the appropriate adapter for your test runner
        runner: 'cucumber',
        // runner: 'mocha',
        // runner: 'jasmine',

        // Register Serenity/JS reporting services, a.k.a. the "stage crew"
        crew: [
            '@serenity-js/console-reporter',
            '@serenity-js/serenity-bdd',
            [ '@serenity-js/core:ArtifactArchiver', { outputDirectory: 'target/site/serenity' } ],
            [ '@serenity-js/web:Photographer', { strategy: 'TakePhotosOfFailures' } ],
        ]
    },

    // Configure your Cucumber runner
    cucumberOpts: {
        // see Cucumber configuration options below
    },

    // ... or Jasmine runner
    jasmineOpts: {
        // see Jasmine configuration options below
    },

    // ... or Mocha runner
    mochaOpts: {
        // see Mocha configuration options below
    },

    runner: 'local',

    // Any other WebdriverIO configuration
};

Learn more about:

- Serenity/JS Cucumber configuration options

- Serenity/JS Jasmine configuration options

- Serenity/JS Mocha configuration options

- WebdriverIO configuration file

Producing Serenity BDD reports and living documentation

Serenity BDD reports and living documentation are generated by Serenity BDD CLI, a Java program downloaded and managed by the @serenity-js/serenity-bdd module.

To produce Serenity BDD reports, your test suite must:

- download the Serenity BDD CLI, by calling serenity-bdd update, which caches the CLI jar locally
- produce intermediate Serenity BDD .json reports, by registering SerenityBDDReporter as per the configuration instructions
- invoke the Serenity BDD CLI when you want to produce the report, by calling serenity-bdd run

The pattern used by all the Serenity/JS Project Templates relies on using:

- a postinstall NPM script to download the Serenity BDD CLI
- npm-failsafe to run the reporting process even if the test suite itself has failed (which is precisely when you need test reports the most...)
- rimraf as a convenience method to remove any test reports left over from the previous run

{
    "scripts": {
        "postinstall": "serenity-bdd update",
        "clean": "rimraf target",
        "test": "failsafe clean test:execute test:report",
        "test:execute": "wdio wdio.conf.ts",
        "test:report": "serenity-bdd run"
    }
}
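The idea behind failsafe clean test:execute test:report can be modeled as follows: every step runs even if an earlier one fails, and the overall exit code still reports the failure. runFailsafe and the Step type are hypothetical. This sketch illustrates the behavior described above; it is not npm-failsafe's implementation.

```typescript
// Hypothetical model of a "failsafe" script sequence; not npm-failsafe's source code
interface Step {
    name: string
    run: () => boolean // true = success, false = failure
}

function runFailsafe(steps: Step[]): { ran: string[]; exitCode: number } {
    const ran: string[] = []
    let anyFailed = false
    for (const step of steps) {
        ran.push(step.name) // every step runs, even after an earlier failure
        if (!step.run()) anyFailed = true
    }
    // The sequence as a whole still fails if any step failed
    return { ran, exitCode: anyFailed ? 1 : 0 }
}
```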

To learn more about the SerenityBDDReporter, please consult:

- installation instructions in @serenity-js/serenity-bdd documentation,
- configuration examples in SerenityBDDReporter API docs,
- Serenity/JS examples on GitHub.

Using Serenity/JS Screenplay Pattern APIs

The Screenplay Pattern is an innovative, user-centred approach to writing high-quality automated acceptance tests. It steers you towards an effective use of layers of abstraction, helps your test scenarios capture the business vernacular of your domain, and encourages good testing and software engineering habits on your team.

By default, when you register @serenity-js/webdriverio as your WebdriverIO framework, Serenity/JS configures a default cast of actors, where every actor can:

This should be enough to help you get started with introducing test scenarios that follow the Screenplay Pattern even to an existing test suite, for example:

import { actorCalled } from '@serenity-js/core'
import { Navigate, Page } from '@serenity-js/web'
import { Ensure, equals } from '@serenity-js/assertions'

describe('My awesome website', () => {
    it('can have test scenarios that follow the Screenplay Pattern', async () => {
        await actorCalled('Alice').attemptsTo(
            Navigate.to(`https://webdriver.io`),
            Ensure.that(
                Page.current().title(),
                equals(`WebdriverIO · Next-gen browser and mobile automation test framework for Node.js | WebdriverIO`)
            ),
        )
    })

    it('can have non-Screenplay scenarios too', async () => {
        await browser.url('https://webdriver.io')
        await expect(browser)
            .toHaveTitle('WebdriverIO · Next-gen browser and mobile automation test framework for Node.js | WebdriverIO')
    })
})
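To make the idea behind actorCalled('Alice').attemptsTo(...) more concrete, here is a deliberately tiny model: an actor performs a sequence of activities in order, and each activity describes itself. The Actor, Activity, and opens names are hypothetical. They are not the Serenity/JS API, and they leave out abilities, questions, and reporting.

```typescript
// Toy model of the Screenplay Pattern's core idea; NOT the Serenity/JS API
interface Activity {
    description: string
    performAs(actor: Actor): Promise<void>
}

class Actor {
    readonly name: string
    readonly log: string[] = []

    constructor(name: string) {
        this.name = name
    }

    // Perform the activities in order; a rejected activity stops the sequence
    async attemptsTo(...activities: Activity[]): Promise<void> {
        for (const activity of activities) {
            this.log.push(`${this.name} ${activity.description}`)
            await activity.performAs(this)
        }
    }
}

// Hypothetical interaction, playing the role that e.g. Navigate.to(...) plays in Serenity/JS
function opens(url: string): Activity {
    return {
        description: `opens ${url}`,
        performAs: async () => {
            // a real interaction would drive the browser through an actor's ability here
        },
    }
}
```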

To learn more about the Screenplay Pattern, check out: